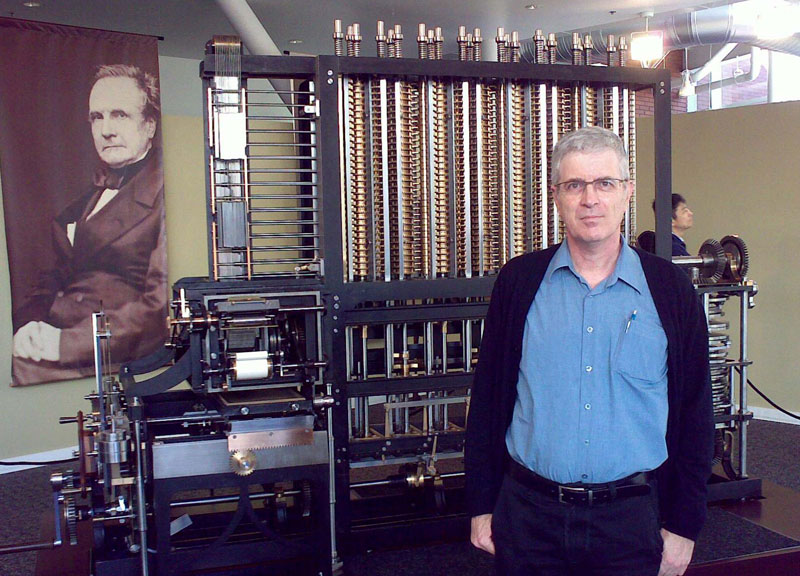

A younger me at the Babbage difference engine built by the Science Museum

Success has many fathers, and so it is hardly surprising that there are numerous claimants to the title “inventor of the computer”. These include innovators like Aiken (constructor of the Harvard Mark I, 1944), Zuse (Z1, 1938), Atanasoff and Berry (ABC, 1942), Flowers (Colossus, 1943), and Mauchly and Eckert (ENIAC, 1946).

But two men stand out, head and shoulders above all of them: Charles Babbage and Alan Turing. These two Englishmen invented the computer from scratch, but unlike the others, both failed to construct an actual machine in their lifetime. Nevertheless, their achievement in envisioning, designing and documenting their inventions was a huge intellectual triumph, and in Turing’s case it was also the basis for all the machines that followed, including the microprocessors we all carry on – and in some cases, inside – our persons.

The two invented the computer independently of each other, and it is interesting to compare how they went about it. Though both were accomplished scientists, they had different personalities and different interests, and they took very different paths: not just in the technology they used but, more interestingly, in their underlying philosophical approach. Their development methodologies differed too, as did the causes of their failure to realize their respective visions. These differences are the subject of this article.

Charles Babbage and his engines

Charles Babbage (1791–1871) was one of the smartest scientists in Victorian England. He made significant contributions in many fields of knowledge: mathematics, economics, cryptography, mechanics, and much more (such breadth was customary in those days, and a person of such wide learning was called a Philosopher). But he is best known (though to far fewer people than he deserves) for his attempt to build computing machines from a myriad of cogwheels, cams and levers.

Babbage started this quest in 1822, aged 30, when his frustration with the errors that plagued hand-calculated tables of mathematical functions led him to launch a project to build a “Difference Engine”, a huge calculator that would spit out plates for printing tables of whatever function you set it up to compute. The machine would contain 25,000 parts, a major undertaking, and the British government gave him a hefty sum to realize this vision. Babbage was making good progress, and had demonstrated part of the machine in 1832, when he had the great misfortune of having a better idea: he realized that he could build a machine that could calculate anything whatsoever that you cared to program it to compute; in other words, a general-purpose computer, which he called the Analytical Engine. Why was this a misfortune? Because, as the saying goes, the Better is the enemy of the Good. Babbage went to the government and proposed that they write off the half-finished Difference Engine (which was well over budget and behind schedule) and give him even more money to explore his new idea. You can imagine the response: he lost all his funding, and spent the rest of his life and his personal fortune trying to build the new machine on his own. He never completed it, and so he died a bitter man without seeing either of his machines finished.
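The engine's name comes from the method of finite differences: for a polynomial of degree n, the n-th differences between successive values are constant, so once the first value and its differences are set up, every further table entry follows from additions alone, which is exactly what columns of cogwheels can do. The Python sketch below illustrates that principle under simplifying assumptions; the function names and the example quadratic are illustrative choices, not Babbage's, and `initial_differences` merely stands in for the starting values an operator would have calculated by hand and set on the wheels.

```python
def initial_differences(f, degree):
    """Return [f(0), Δf(0), Δ²f(0), ..., Δⁿf(0)] for a polynomial f of the given degree."""
    row = [f(x) for x in range(degree + 1)]
    result = []
    while row:
        result.append(row[0])
        row = [b - a for a, b in zip(row, row[1:])]  # next order of differences
    return result


def difference_table(columns, steps):
    """Tabulate f(0), f(1), ... using additions only, as a difference engine does.

    columns holds one value per order of difference, loosely like the engine's
    columns of figure wheels; the last column stays constant.
    """
    columns = list(columns)
    values = []
    for _ in range(steps):
        values.append(columns[0])
        # Add each column into the one above it, lowest order first, so each
        # addition uses the next column's value from before this step.
        for i in range(len(columns) - 1):
            columns[i] += columns[i + 1]
    return values


def f(x):
    return x * x + x + 41  # an example quadratic


start = initial_differences(f, 2)  # [41, 2, 2]
print(difference_table(start, 6))  # [41, 43, 47, 53, 61, 71]
```

No multiplication happens after setup: each new entry costs one addition per order of difference, which is why a purely additive mechanism was enough for polynomial tables.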

And yet Babbage’s achievement is mind-boggling. His Analytical Engine is a true computer, complete with central processor, memory, punched cards, printer, a programming language with subroutines and conditional branching, and so on. He left us numerous notebooks with incredibly intricate designs for the many mechanisms of both his engines, as well as the theory underlying their architecture. Doing all that is a task for the likes of Intel or IBM; to do it alone a century before its time was a huge intellectual breakthrough – a tragic one, since it was completely forgotten by the 20th century and had no impact whatsoever on the invention of the computers we use today.

Alan Turing and the birth of Computer Science

Alan Mathison Turing (1912–1954) also sank into general oblivion after his death, but was brought back into the public eye when a film was made about his WW2 role in cracking the Enigma code. His contributions to computing, however, straddle the war period, and they are seminal. It is fair to say that Turing laid the foundations for almost everything important in today’s computer science.

In 1936, aged only 24, Turing published an esoteric mathematical paper (“On computable numbers, with an application to the Entscheidungsproblem”) in which he needed a model of what can be computed by a machine; this theoretical model, later named the Turing Machine, is at the heart of every computer on planet Earth today. After the war Turing went on to prepare a detailed design for an electronic computer realizing the abstract ideas of his earlier paper. This gave a big boost to the development of the first large computers in the UK, although Turing himself was pushed out of that work: at first because his superiors refused to believe his design was feasible, and later because he was outed as a homosexual and, given the barbarous attitudes of that era, his access to computers was revoked. He died two years later, probably by his own hand.
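The model in the 1936 paper is strikingly small: a tape of symbols, a head that reads and writes one cell at a time, and a finite table of rules saying, for each state and symbol read, what to write, which way to move, and which state to enter next. The Python sketch below is a minimal simulator built on those assumptions; the rule-table format, the state names and the example machine (a binary incrementer) are hypothetical illustrations, not Turing's own notation.

```python
from collections import defaultdict

BLANK = "_"


def run_turing_machine(rules, tape, state="start", halt="halt", max_steps=10_000):
    """Run a single-tape Turing machine and return the final tape contents.

    rules maps (state, symbol) -> (symbol_to_write, move, next_state),
    where move is -1 (one cell left) or +1 (one cell right).
    """
    cells = defaultdict(lambda: BLANK, enumerate(tape))  # tape unbounded both ways
    head = 0
    steps = 0
    while state != halt:
        if steps >= max_steps:
            raise RuntimeError("machine did not halt within max_steps")
        # Look up the rule for the current state and the symbol under the head.
        write, move, state = rules[(state, cells[head])]
        cells[head] = write
        head += move
        steps += 1
    if not cells:
        return ""
    # Read back every cell touched, then trim blanks at both ends.
    lo, hi = min(cells), max(cells)
    return "".join(cells[i] for i in range(lo, hi + 1)).strip(BLANK)


# Hypothetical example machine: add 1 to a binary number.
# The head starts on the leftmost digit.
INCREMENT = {
    ("start", "0"): ("0", +1, "start"),   # scan right to the end of the number
    ("start", "1"): ("1", +1, "start"),
    ("start", BLANK): (BLANK, -1, "carry"),
    ("carry", "1"): ("0", -1, "carry"),   # 1 + carry = 0, carry moves left
    ("carry", "0"): ("1", -1, "halt"),    # 0 + carry = 1, done
    ("carry", BLANK): ("1", -1, "halt"),  # ran off the left end: new leading 1
}

print(run_turing_machine(INCREMENT, "1011"))  # 1100
```

Everything machine-specific lives in the rule table; the loop itself never changes, which hints at why one such model can stand for every computer.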

Two paths to innovation

It is fascinating to compare the innovative processes of these two pioneers, which differ in multiple ways.

Babbage had a specific and very practical purpose that triggered his efforts: he wanted to compute error-free tables. Turing was motivated by a different goal: he wanted to understand the mathematical underpinnings of the human brain. He says so explicitly in a letter to a friend: “In working on the ACE I am more interested in the possibility of producing models of the action of the brain than in the practical applications to computing”. Indeed, soon afterwards Turing published the paper “Computing machinery and intelligence” (1950), which marks the founding of Artificial Intelligence as a scientific field, and in which he proposed the now-famous imitation game as a test of machine intelligence.

This difference in goals led to different development processes. Turing developed the Turing Machine model by deconstructing the brain: his 1936 paper starts from a person computing by hand and derives a simplified model of the thought process involved. Babbage simply looked at the mathematical actions required (addition, tens carry, comparison, etc.) and devised mechanisms that would make them happen. He never considered his computing engines as anything analogous to a brain.

Another difference: Turing was primarily a theoretician, a mathematician in fact, and his contributions are mostly captured on paper. He did have a practical streak (he was genuinely fascinated by the challenges of implementing his theories in actual electronic circuitry), but that was secondary to his theoretical curiosity. Babbage, at least where computing was concerned, was entirely a constructor, a geek, a maker on a grand scale. His approach was to secure funding, contract with a workshop, and build machines that actually worked and produced valuable results.

It is therefore no surprise that the two had different plans for the future of their brainchildren. Turing philosophized about the significance of his invention, extending its hypothetical potential to playing chess, machine learning, natural language processing and other expressions of intelligence. Babbage was too busy to speculate beyond mathematical computation. (His collaborator Ada, Countess of Lovelace, did see further: she wrote about the Analytical Engine’s potential to compose music. But even she qualified her vision, writing that the Analytical Engine “has no pretensions whatever to originate anything. It can do whatever we know how to order it to perform”.)

Two paths to failure

As I said, neither Babbage nor Turing succeeded in implementing their designs. Turing at least saw his design built by others; Babbage didn’t see his realized at all. Two failures, but here too the causes differ.

Much has been written about why Babbage failed, given the basic soundness of his designs. The easy answer, that he was ahead of his time, is an excuse: the Science Museum’s Difference Engine uses techniques and materials that were available to Babbage in his day, and the machine works quite well. A more likely cause is Babbage’s dismal people skills. He had a big ego, and he made no secret of his negative views about scientific and political leaders who were critical to securing his funding, with the results you’d expect. He also managed to quarrel with Joseph Clement, his chief mechanic, to the point that work stopped. And when Lady Ada offered to handle his external relations (which might well have made a big difference), he rejected the offer. Obstinate and quarrelsome, Babbage painted himself into a corner time after time.

Alan Turing did not have such problems. He was an introverted man, but he could get along with people when he needed to. His failure to build a computer was mostly a byproduct of the country’s antiquated views and laws on homosexuality: computers were considered a security-related technology, and the view in those days was that gay men were vulnerable to blackmail, hence the loss of his access to computing projects. It didn’t help that after his conviction he was made to take estrogen pills to “cure” his condition, and that among their side effects was reduced mental acuity. We might excuse the British government of 1842 for pulling Babbage’s funding given the project’s history, but not the government of 1952 for drugging the country’s leading computer scientist and the hero of Bletchley Park, very likely driving him to take his own life.

Even before his removal, Turing was handicapped by another aspect of the government’s way of doing things: he proposed a full design for a modern electronic computer, the ACE, but management at the UK’s National Physical Laboratory rejected it because they didn’t believe such a large tube-based machine would work reliably, and Turing couldn’t tell them that he’d seen it done at Bletchley Park during the war, because he was sworn to secrecy. Government paranoia prevented the knowledge transfer that would have made his plan acceptable.

Vindication

Both Alan Turing and Charles Babbage sank into obscurity after their deaths. It took some decades for Turing to get his well-deserved fame; Babbage had to wait over a century to be rediscovered, and still has a way to go.

In Turing’s case, his contribution is now fully recognized, as is the wrong that was done to him. Babbage’s vindication is still in progress. The Science Museum in London built his Difference Engine No. 2 in 1991, working from his blueprints, and it works perfectly; the Analytical Engine may yet be realized by the crowdsourced Plan 28 project, but it will take a while, as it was a truly complex vision.


Thank you. You might say, then, all things being equal, without Turing, the modern computer and the field of computer science does not exist; whereas the same cannot be said of Babbage.

Thanks Nathan. Where would you place John von Neumann in this Venn diagram? 🙂

Well, von Neumann was a man of great achievements, but he certainly did not invent the computer. He did come up with the stored-program architecture we use to this day, and deserves much honor, but I’d put him on a different level than either Turing or Babbage.