Those of us who grew up with 1960s science fiction remember well Frank Herbert’s magnum opus, Dune. That amazing book laid the groundwork for much environmentalist thinking and has inspired an entire generation of fans. The story’s feudal interstellar society, set thousands of years in our future, has very advanced capabilities, notably faster-than-light travel; yet one thing is missing: there are no computers anywhere (except for the mentats, humans specially trained to function as computers).

The reason there are no computers in Dune’s universe is hinted at repeatedly in the book: it is the outcome of the Butlerian Jihad that had happened millennia earlier, a violent crusade against computers, thinking machines, and conscious robots. This technology remained taboo, and the commandment “Thou shalt not make a machine in the likeness of a human mind” became deeply ingrained in subsequent human societies.

It is likely that Herbert named this fictional Jihad after Samuel Butler, a 19th-century novelist whose article “Darwin among the Machines” had introduced the concept of machines as the next stage in the mineral-vegetable-animal progression. Butler expected the inevitable evolution of machines to reach the point where they would surpass the human race and become “man’s successor to the supremacy of the earth”. He concluded that “war to the death should be instantly proclaimed against them. Every machine of every sort should be destroyed by the well-wisher of his species”. But he also conceded that “if it be urged that this is impossible under the present condition of human affairs, this at once proves that the mischief is already done, that our servitude has commenced in good earnest, that we have raised a race of beings whom it is beyond our power to destroy, and that we are not only enslaved but are absolutely acquiescent in our bondage”.

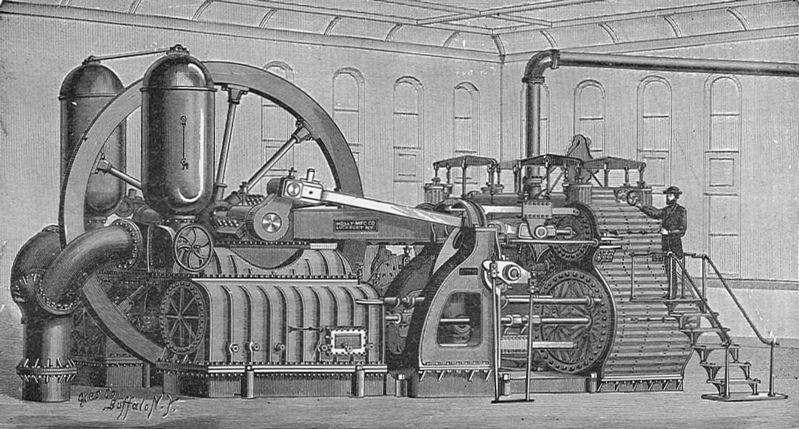

All this seems quaint, considering that the machines of Butler’s lifetime were driven by steam; yet his thinking closely presages what we face today with machines driven by microchips. Our machines, too, are evolving (though much more rapidly); we are becoming completely dependent on them; and the future of our interaction with them is causing growing concern among thinkers like Stephen Hawking (“The development of full Artificial Intelligence could spell the end of the human race”) and Elon Musk (“Mark my words: A.I. is far more dangerous than nukes… it scares the hell out of me”).

So should we take Butler’s advice and instantly proclaim war to the death against thinking machines? Or are we at the point where “this is impossible under the present condition of human affairs”? We are indeed at that point, though people frequently deny it when I explain the dangers of AI to them: they can’t see a future where intelligent computers or robots take over because “we can always turn them off”. The problem is that we can’t turn them off even today, when they are dumber than we are; we are already totally dependent on computers, on the internet, and increasingly on AI tools for our commerce, our food production and distribution, and every branch of our technology. Turning off the global computer network would create chaos and disaster; it’s hard to imagine what would happen, but billions might die before humanity could revert to the pre-computer era (when the planet supported about a third of its current population). We have, indeed, “raised a race of beings whom it is beyond our power to destroy”.

There is, however, one difference between Butler’s machines and ours: the machines he envisioned were neither intelligent nor conscious. In fact, in a rather amusing passage he describes their supremacy as grounded in their freedom from evil passions, jealousy, avarice, sin, shame, sorrow, or any impure desires that might disturb their serene might. And while he hints at a possible future when machines will be able to reproduce without any human intervention, he mostly assumes that humans will service the machines, which will maintain a pleasant symbiosis with us. But the AI machines we see in our future are definitely intelligent (and possibly conscious), and there is no telling what they’ll decide to do about humanity. When Hawking said that AI could spell the end of the human race, he wasn’t worrying about symbiotic servitude; he was thinking about extinction.

Whether this conflict with AI will happen, who will win it, and whether a Butlerian Jihad will take place after all remains to be seen. We can only hope that the historians who document it in retrospect will be human.


What dangers do you foresee? Is it that AI will turn on us? That is an unsupported and paranoid notion based entirely on anthropocentric thinking. Herbert’s vision was brilliant precisely because he didn’t believe this would happen. He predicted that humans would revolt against thinking machines because of how they had been misused, and because people had grown restless and tired of their dependence on them. And with the machines went the whole machine mentality, automation, and the very basis of an open society.

That is why the Imperium emerged and humanity reverted to a feudal culture that grew incredibly stagnant and was headed for extinction. Herbert wasn’t portraying the Jihad as a good thing, but as an inevitable outcome of modernization and automation.